Overview

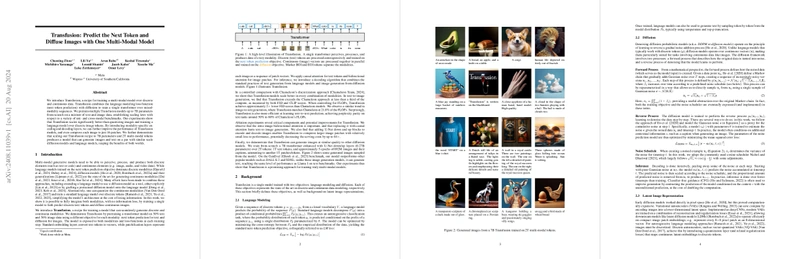

Overview Transfusion is a multi-modal model that integrates text (discrete data) and image (continuous data) generation using a single transformer-based architecture, combining next-token prediction and diffusion processes.

The model outperforms traditional models like Chameleon in scalability and performance, benefiting from modality-specific enhancements such as U-Net blocks and larger image patches to reduce computation costs while maintaining high-quality output.

Experiments demonstrate Transfusion's efficiency across various benchmarks and its potential for large-scale applications, showcasing robustness in both text and image generation tasks and suggesting future research directions in generative modeling.

An Analysis of "Transfusion: Predict the Next Token and Diffuse Images with One Multi-Modal Model"The paper presents a method named "Transfusion" which enables training a multi-modal model for both discrete and continuous data using transformers. Traditionally, generative models have been piecemealed, with language models dominating discrete modalities and diffusion models excelling in continuous modalities. This research proposes a novel integration by leveraging both a language modeling objective for text and a diffusion objective for images within a single transformer-based architecture.

Key ContributionsUnified Multi-modal Framework: Transfusion integrates text and image generation by combining next-token prediction with diffusion in a single architecture. This method is designed to function seamlessly across discrete and continuous data types, accommodating various generation tasks in one model.Scalability and Performance Gains: The paper demonstrates that Transfusion scales efficiently, outperforming traditional models like Chameleon (an image quantization approach) in both discrete and continuous modalities. Notably, Transfusion achieves superior scaling laws, meaning it retains or improves performance with increased data and model size more efficiently than Chameleon.Modality-Specific Enhancements: Experiments reveal that incorporating modality-specific encoding and decoding layers, such as U-Net blocks for images, further boosts performance. Larger image patches significantly reduce computation costs while maintaining or even improving model output quality.Evaluation on Multiple Benchmarks: Transfusion's capability is comprehensively evaluated across several benchmarks. These include perplexity on text corpora (Wikipedia and C4), accuracy on the Llama evaluation suite, and FID/CLIP scores for text-to-image tasks. The model also shows efficiency in image-to-text generation, evidenced by high CIDEr scores on the MS-COCO dataset.Experimental FindingsControlled Comparison with Chameleon:

Using equivalent compute and training setups, Transfusion surpassed Chameleon on all benchmarks. For instance, text-to-image FID scores improved substantially with Transfusion needing significantly fewer FLOPs.Transfusion also exhibited superior text generation capabilities, indirectly benefitting from the diffusion process due to more efficient parameter utilization.Architectural Ablations:

Enabling intra-image bidirectional attention showed significant improvements, particularly in image quality (FID scores).The model's performance with different patch sizes was evaluated, finding that larger patches could efficiently trade off computation while maintaining image quality, especially when using U-Net layers.The U-Net layer as a patch encoder/decoder consistently outperformed simple linear layers, suggesting that the inductive biases of U-Nets are beneficial for high-quality image generation.Large-scale Model Evaluation:

A scaled-up 7B parameter model was trained on a mix of 2T tokens comprising both text and image data. This model outperformed several state-of-the-art image generation models (e.g., SDXL, DeepFloyd) on the GenEval benchmark.The model retained robust text generation abilities, akin to Llama models, validating Transfusion's versatility in handling both text and image modalities effectively.Practical and Theoretical ImplicationsPractical:

Transfusion is a significant step towards developing more integrated and efficient multi-modal models, reducing the need for separate systems for text and image generation.It has potential applications in areas requiring detailed and coherent inter-modal generation, such as creative content creation, automated reporting, and sophisticated AI-assisted design.Theoretical:

This work furthers our understanding of how different model architectures and training objectives can be harmonized to accommodate diverse data types within a unified framework.Investigating the reasons behind the better scaling properties of Transfusion could offer insights into new methods for optimizing large-scale generative models across various data modalities.Future Research DirectionsThe paper hints at several promising research directions stemming from their findings:

Combination with Other Generative Techniques: Exploring advanced generative modeling frameworks, such as flow matching, could further enhance the model's capabilities.Parameter Sharing and Optimization: Understanding the intricacies of parameter utilization between modalities could lead to more efficient multi-modal architectures.Extended Modalities: Expanding Transfusion to include audio and video data, incorporating multi-modal interactions beyond text and image, would be a natural progression.Adaptive Training Objectives: Dynamic tuning of the balancing coefficient (位\lambda位) during training could optimize performance across tasks and datasets more granularly.In conclusion, Transfusion presents a significant advancement in multi-modal generative modeling, setting a new benchmark in the seamless integration of discrete and continuous data generation within a unified framework. This work lays the foundation for future innovations in multi-modal AI, promising more cohesive and versatile generative models.

User Edit Pencil Streamline Icon: https://streamlinehq.comAuthors (10) Chunting Zhou (31 papers) Lili Yu (19 papers) Arun Babu (14 papers) Kushal Tirumala (15 papers) Michihiro Yasunaga (43 papers) Leonid Shamis (1 paper) Jacob Kahn (19 papers) Xuezhe Ma (45 papers) Luke Zettlemoyer (199 papers) Omer Levy (70 papers)Related Papers (10) INTPIX4NA -- new integration-type silicon-on-insulator pixel detector for imaging application (Nishimura et al., 2021) PDF Buffer-Based Distributed LT Codes (Hussain et al., 2014) PDF A Concrete View of Rule 110 Computation (Cook, 2009) PDF The E8 Lattice and Error Correction in Multi-Level Flash Memory (Kurkoski, 2010) PDF Plated-Through-Hole Via Design Specifications for 112G Serial Links (Degerstrom et al., 2023) PDF Epiphany-V: A 1024 processor 64-bit RISC System-On-Chip (Olofsson, 2016) PDF MacWilliams Type identities for $m$-spotty Rosenbloom-Tsfasman weight enumerators over finite commutative Frobenius rings (Shi, 2013) PDF A Data-Centric Approach to Extreme-Scale Ab initio Dissipative Quantum Transport Simulations (Ziogas et al., 2019) PDF Corrigendum to: A Systematic Study of DDR4 DRAM Faults in the Field (Beigi et al., 27 Aug 2024) PDF Logic, Design & Organization of PTVD-SHAM; A Parallel Time Varying & Data Super-helical Access Memory (Alipour, 2007) PDF